Building a Ceph Cluster

Environment preparation:

| Hostname | IP (external) | IP (internal) |
| --- | --- | --- |
| ceph-01 | 192.168.0.114 | 10.1.1.11 |
| ceph-02 | 192.168.0.115 | 10.1.1.12 |
| ceph-03 | 192.168.0.116 | 10.1.1.13 |

1. Set each host's hostname, make the hosts resolve one another by name, and set up passwordless SSH login.
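Setting the hostname and name resolution can be sketched as follows. The sketch assumes that the nodes should resolve each other through the internal 10.1.1.x addresses from the table, which is the same network used later for `mon_host`. The helper name `add_ceph_hosts` is hypothetical. On each node, first run `hostnamectl set-hostname ceph-01` (or `ceph-02` / `ceph-03` as appropriate), then call the helper:

```bash
#!/usr/bin/env bash
# Hypothetical helper: append the internal-network entries from the host table
# to a hosts file (default /etc/hosts), skipping names that are already listed.
add_ceph_hosts() {
    local hosts_file="${1:-/etc/hosts}"
    local entry ip name
    for entry in "10.1.1.11 ceph-01" "10.1.1.12 ceph-02" "10.1.1.13 ceph-03"; do
        ip="${entry% *}"      # part before the space: the IP address
        name="${entry#* }"    # part after the space: the hostname
        # Skip this host if any line already lists exactly this name (field 2 onward).
        if awk -v n="$name" '{ for (i = 2; i <= NF; i++) if ($i == n) found = 1 } END { exit !found }' \
               "$hosts_file" 2>/dev/null; then
            continue
        fi
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    done
}
```

Running `add_ceph_hosts` twice leaves a single entry per host. Afterwards `ping -c 1 ceph-02` should resolve from every node.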

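```
[root@ceph-01 ~]# ssh-keygen                                    ## generate a key pair on every node
[root@ceph-03 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub ceph-01      ## run on every node so that ceph-01 collects all public keys
[root@ceph-01 ~]# scp ~/.ssh/authorized_keys ceph-02:~/.ssh/    ## copy the collected public keys to the other nodes (repeat for ceph-03)
```

2. Install the EPEL and Ceph repositories, update the system with yum, and install some utilities and the chronyd service.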

```
[root@ceph-01 ~]# yum install -y epel-release
[root@ceph-01 ~]# yum install -y bash-completion net-tools chrony vim telnet
[root@ceph-01 ~]# yum update        ## update all packages (this also upgrades the kernel)

### Add the Ceph (Luminous) repository
[root@ceph-01 ~]# cat << EOF > /etc/yum.repos.d/ceph.repo
[Ceph]
name=Ceph packages for x86_64
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/x86_64
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
priority=1

[Ceph-noarch]
name=Ceph noarch packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/noarch
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
priority=1

[ceph-source]
name=Ceph source packages
baseurl=http://mirrors.aliyun.com/ceph/rpm-luminous/el7/SRPMS
enabled=1
gpgcheck=0
type=rpm-md
gpgkey=https://mirrors.aliyun.com/ceph/keys/release.asc
priority=1
EOF
```

```
## Time synchronization (chrony): server-side configuration on ceph-01
[root@ceph-01 ~]# cat /etc/chrony.conf
#server 0.centos.pool.ntp.org iburst
#server 1.centos.pool.ntp.org iburst
#server 2.centos.pool.ntp.org iburst
#server 3.centos.pool.ntp.org iburst
allow 10.1.1.0/24
local stratum 10
```
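The server-side chrony edit above, and the client-side one that follows, can both be scripted. The sketch assumes the stock CentOS 7 `/etc/chrony.conf`, where the upstream servers appear as `server ... iburst` lines. The names `configure_chrony` and `append_once` are hypothetical.

```bash
#!/usr/bin/env bash
# Append a line to a file only if an identical line is not already present.
append_once() {
    grep -qxF -- "$1" "$2" 2>/dev/null || printf '%s\n' "$1" >> "$2"
}

# Hypothetical helper for the two chrony roles used in this cluster:
#   server: allow 10.1.1.0/24 and serve the local clock at stratum 10 (ceph-01)
#   client: sync from ceph-01 at 10.1.1.11 (ceph-02, ceph-03)
configure_chrony() {
    local role="$1" conf="${2:-/etc/chrony.conf}"
    case "$role" in
        server|client) ;;
        *) echo "usage: configure_chrony server|client [conf]" >&2; return 1 ;;
    esac
    # Comment out every active "server" line except one that already points at ceph-01.
    sed -i '/^server 10\.1\.1\.11 /!s/^server /#server /' "$conf" || return 1
    if [ "$role" = server ]; then
        append_once 'allow 10.1.1.0/24' "$conf"
        append_once 'local stratum 10' "$conf"
    else
        append_once 'server 10.1.1.11 iburst' "$conf"
    fi
}
```

Run `configure_chrony server` on ceph-01 and `configure_chrony client` on the other nodes. Then run `systemctl enable chronyd && systemctl restart chronyd` on each node, and check the time sources with `chronyc sources`.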

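```
## Time synchronization (chrony): client-side configuration on ceph-02 and ceph-03
[root@ceph-02 ~]# cat /etc/chrony.conf
server 10.1.1.11 iburst
```

3. Disable firewalld, SELinux, and NetworkManager on all machines.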

```
[root@ceph-01 ~]# systemctl stop firewalld.service
[root@ceph-01 ~]# systemctl stop NetworkManager
[root@ceph-01 ~]# systemctl disable firewalld.service
[root@ceph-01 ~]# systemctl disable NetworkManager
[root@ceph-01 ~]# sed -i 's/enforcing/disabled/g' /etc/selinux/config
```
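The `sed` above replaces every occurrence of "enforcing" in the file, including the comment text. A more targeted variant is sketched below; it rewrites only the `SELINUX=` directive and leaves `SELINUXTYPE=` and the comments untouched. The function name `disable_selinux_config` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: set SELINUX=disabled in the given config file
# (default /etc/selinux/config), touching only the SELINUX= line.
disable_selinux_config() {
    local cfg="${1:-/etc/selinux/config}"
    sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

The file change only takes effect after a reboot. Running `setenforce 0` additionally switches the running system to permissive mode until then.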

```
[root@ceph-01 ~]# reboot    ## reboot so that the kernel update and the SELinux change take effect
```

Installing the Ceph cluster:

1. Install the ceph-deploy, ceph, and ceph-radosgw packages.

```
[root@ceph-01 ~]# yum install ceph-deploy ceph ceph-radosgw -y
[root@ceph-01 ~]# ceph-deploy --version
2.0.1
[root@ceph-01 ~]# ceph -v
ceph version 12.2.13 (584a20eb0237c657dc0567da126be145106aa47e) luminous (stable)
```

2. Build the cluster.

```
[root@ceph-01 ceph]# ceph-deploy new ceph-01 ceph-02    ## write a new ceph.conf and monitor keyring with ceph-01 and ceph-02 as initial monitors

## Create the monitors
[root@ceph-01 ceph]# ceph-deploy mon create-initial      ## deploy the initial monitors and gather the keys
[root@ceph-01 ceph]# ceph -s                             ## check the cluster status; HEALTH_OK means it is healthy
  cluster:
    id:     59b9b684-be68-4356-a2ec-d08ceb6f9c1e
    health: HEALTH_OK

  services:
    mon: 2 daemons, quorum ceph-01,ceph-02
    mgr: no daemons active
    osd: 0 osds: 0 up, 0 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0B
    usage:   0B used, 0B / 0B avail
    pgs:
```

```
### Push the configuration file and the admin keyring to the nodes, then make the keyring readable
[root@ceph-01 ceph]# ceph-deploy admin ceph-01 ceph-02
[root@ceph-01 ceph]# chmod +r ceph.client.admin.keyring
[root@ceph-02 ceph]# chmod +r ceph.client.admin.keyring
```

Add the OSDs:

```
[root@ceph-01 ceph]# lsblk
NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
sda      8:0    0   10G  0 disk
├─sda1   8:1    0  500M  0 part /boot
└─sda2   8:2    0  9.5G  0 part /
sdb      8:16   0   10G  0 disk
sdc      8:32   0   20G  0 disk
```

```
[root@ceph-01 ceph]# ceph-deploy osd create --data /dev/sdb ceph-01
[root@ceph-01 ceph]# ceph-deploy osd create --data /dev/sdc ceph-01
[root@ceph-01 ceph]# ceph-deploy osd create --data /dev/sdb ceph-02
[root@ceph-01 ceph]# ceph-deploy osd create --data /dev/sdc ceph-02
```
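The repeated `ceph-deploy osd create` calls can be wrapped in a loop. The sketch assumes that every listed host has the same empty data disks. `create_osds` and the `DRY_RUN` variable are hypothetical names; with `DRY_RUN=1` the function only prints the commands it would run.

```bash
#!/usr/bin/env bash
# Hypothetical wrapper around the ceph-deploy 2.x "osd create --data" syntax used above.
# Usage: create_osds "<disk names>" <host>...
create_osds() {
    local disks="$1"; shift
    local host disk
    for host in "$@"; do
        # $disks is intentionally unquoted so that "sdb sdc" splits into two disks.
        for disk in $disks; do
            if [ "${DRY_RUN:-0}" = 1 ]; then
                echo "ceph-deploy osd create --data /dev/$disk $host"
            else
                ceph-deploy osd create --data "/dev/$disk" "$host" || return 1
            fi
        done
    done
}
```

For example, `DRY_RUN=1 create_osds "sdb sdc" ceph-01 ceph-02` previews the four commands above. Running it again without `DRY_RUN` executes them, and the function stops at the first failure.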

```
[root@ceph-01 ceph]# ceph -s    ## check the cluster status
  cluster:
    id:     59b9b684-be68-4356-a2ec-d08ceb6f9c1e
    health: HEALTH_OK

  services:
    mon: 2 daemons, quorum ceph-01,ceph-02
    mgr: ceph-01(active), standbys: ceph-02
    osd: 3 osds: 3 up, 3 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0B
    usage:   3.01GiB used, 37.0GiB / 40.0GiB avail
    pgs:
```

Expanding the Ceph cluster:

1. Add OSDs

```
[root@ceph-01 ceph]# ceph-deploy osd create --data /dev/sdb ceph-03
[root@ceph-01 ceph]# ceph-deploy osd create --data /dev/sdc ceph-03
[root@ceph-01 ceph]# ceph -s
  cluster:
    id:     59b9b684-be68-4356-a2ec-d08ceb6f9c1e
    health: HEALTH_OK

  services:
    mon: 2 daemons, quorum ceph-01,ceph-02
    mgr: ceph-01(active), standbys: ceph-02
    osd: 5 osds: 5 up, 5 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0B
    usage:   5.01GiB used, 65.0GiB / 70.0GiB avail
    pgs:
```
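A script can check the OSD summary line in `ceph -s` output instead of relying on a visual check. The sketch assumes the Luminous-style line `osd: N osds: N up, N in` shown above; newer releases format this line differently, and the check would then fail. `osds_all_up_in` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical check: read "ceph -s" output on stdin and succeed only if there is
# at least one OSD and every OSD is both up and in.
osds_all_up_in() {
    awk '
        /osd: [0-9]+ osds: [0-9]+ up, [0-9]+ in/ {
            found = 1
            for (i = 1; i <= NF; i++) {
                if ($i == "osds:") total = $(i - 1)   # number before "osds:"
                if ($i == "up,")   up    = $(i - 1)   # number before "up,"
                if ($i == "in")    inn   = $(i - 1)   # number before "in"
            }
        }
        END { exit !(found && total > 0 && up == total && inn == total) }
    '
}
```

For example, `ceph -s | osds_all_up_in && echo "all OSDs are up and in"`.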

2. Add a monitor

```
[root@ceph-01 ceph]# cat ceph.conf    ## add ceph-03 and the internal network (as public network) to the conf file
[global]
fsid = 59b9b684-be68-4356-a2ec-d08ceb6f9c1e
mon_initial_members = ceph-01, ceph-02, ceph-03
mon_host = 10.1.1.11,10.1.1.12,10.1.1.13
auth_cluster_required = cephx
auth_service_required = cephx
auth_client_required = cephx
public network = 10.1.1.0/24

[root@ceph-01 ceph]# ceph-deploy --overwrite-conf config push ceph-01 ceph-02 ceph-03    ## push the updated ceph.conf to all nodes
[root@ceph-01 ceph]# ceph-deploy mon add ceph-03    ## add ceph-03 as a monitor
```

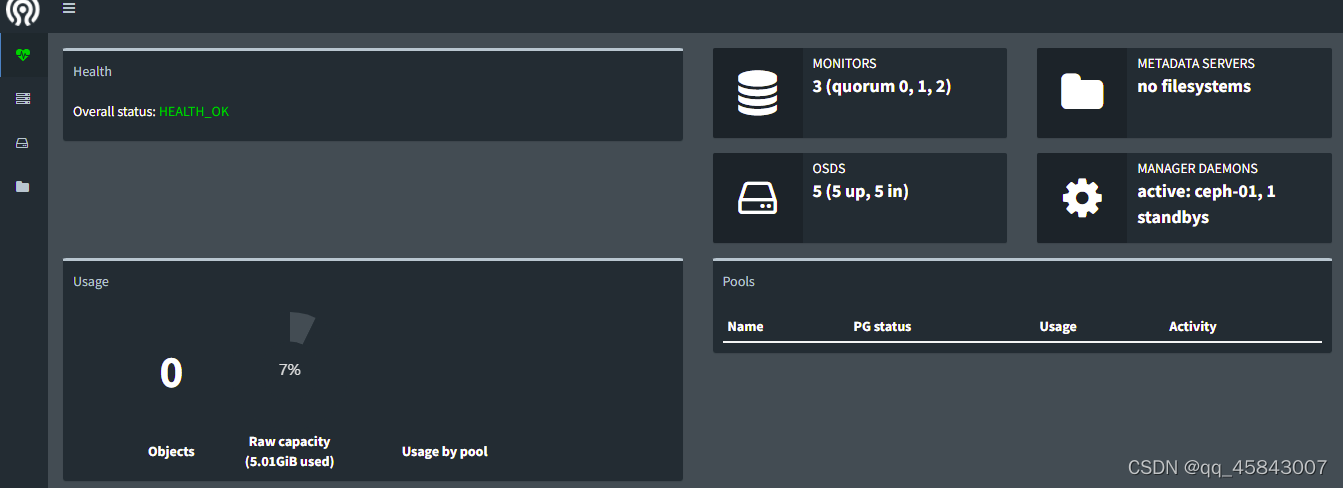

```
[root@ceph-01 ceph]# ceph -s
  cluster:
    id:     59b9b684-be68-4356-a2ec-d08ceb6f9c1e
    health: HEALTH_OK

  services:
    mon: 3 daemons, quorum ceph-01,ceph-02,ceph-03
    mgr: ceph-01(active), standbys: ceph-02
    osd: 5 osds: 5 up, 5 in

  data:
    pools:   0 pools, 0 pgs
    objects: 0 objects, 0B
    usage:   5.01GiB used, 65.0GiB / 70.0GiB avail
    pgs:
```
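The `mon:` line shows whether every monitor, including the newly added ceph-03, has joined the quorum. The sketch below compares the number of daemons with the number of names listed after `quorum`. It assumes the Luminous-style line format shown above, and `mons_all_in_quorum` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical check: read "ceph -s" output on stdin and succeed only if the
# number of monitors listed in the quorum equals the number of monitor daemons.
mons_all_in_quorum() {
    awk '
        /mon: [0-9]+ daemons, quorum / {
            found = 1
            for (i = 1; i <= NF; i++) {
                if ($i == "daemons,") total = $(i - 1)    # number before "daemons,"
                if ($i == "quorum")   members = $(i + 1)  # comma-separated names
            }
            sub(/,$/, "", members)                        # drop a trailing comma, if any
            inq = split(members, names, ",")
        }
        END { exit !(found && total > 0 && inq == total) }
    '
}
```

For example, `ceph -s | mons_all_in_quorum && echo "all monitors are in quorum"`.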

Installing the Dashboard:

```
## Create the mgr daemons
[root@ceph-02 ceph]# ceph-deploy mgr create ceph-01 ceph-02

## Enable the dashboard module
[root@ceph-01 ceph]# ceph mgr module enable dashboard
```

Configuring a client to use RBD:

Create the kvm, images, and cinder pools so that they can be used later when integrating with an OpenStack cluster.

```
[root@ceph-01 ceph]# ceph osd pool create kvm 128 128
[root@ceph-01 ceph]# ceph osd pool create images 128 128
[root@ceph-01 ceph]# ceph osd pool create cinder 128 128
[root@ceph-01 ceph]# ceph osd pool ls    ## list all pools
```

```
## Get the current PG and PGP counts (optional)
[root@ceph-01 ceph]# ceph osd pool get kvm pg_num
[root@ceph-01 ceph]# ceph osd pool get kvm pgp_num

# Fewer than 5 OSDs: set pg_num to 128.
# 5 to 10 OSDs: set pg_num to 512.
# 10 to 50 OSDs: set pg_num to 4096.
```
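The rule of thumb above maps directly to a small lookup. In this sketch, `suggest_pg_num` is a hypothetical name. An OSD count of 10, which appears in both of the ranges above, is treated as 512. Counts above 50 are rejected because the rule does not cover them.

```bash
#!/usr/bin/env bash
# Hypothetical helper implementing the pg_num rule of thumb listed above.
suggest_pg_num() {
    local osds="$1"
    case "$osds" in
        ''|*[!0-9]*) echo "OSD count must be a non-negative integer" >&2; return 2 ;;
    esac
    if [ "$osds" -lt 5 ]; then
        echo 128
    elif [ "$osds" -le 10 ]; then
        echo 512
    elif [ "$osds" -le 50 ]; then
        echo 4096
    else
        echo "more than 50 OSDs: calculate pg_num for your cluster instead" >&2
        return 1
    fi
}
```

For example, `suggest_pg_num "$(ceph osd ls | wc -l)"` counts the OSD IDs that `ceph osd ls` prints and returns 512 for the 5 OSDs in this cluster. On Luminous, `pg_num` can be increased but not decreased, so choose the value with care.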

```
## Increase the PG and PGP counts (optional)
[root@ceph-01 ceph]# ceph osd pool set kvm pg_num 512
[root@ceph-01 ceph]# ceph osd pool set kvm pgp_num 512

## Initialize the pools
[root@ceph-01 ceph]# rbd pool init kvm
[root@ceph-01 ceph]# rbd pool init images
[root@ceph-01 ceph]# rbd pool init cinder

## Create a 4 GiB block device in the kvm pool
[root@ceph-01 ceph]# rbd create kvm/tz --size 4096
[root@ceph-01 ceph]# rbd info kvm/tz    ## show the block device details
[root@ceph-01 ceph]# rbd list kvm       ## list all block devices in the kvm pool

## Map the block device
[root@ceph-01 ceph]# rbd map kvm/tz
```

Fixing the error from `rbd map`: first check which features the image has.

```
[root@ceph-01 ceph]# rbd info kvm/tz    ## show the features the image uses
rbd image 'tz':
        size 4GiB in 1024 objects
        order 22 (4MiB objects)
        block_name_prefix: rbd_data.ac956b8b4567
        format: 2
        features: layering, exclusive-lock, object-map, fast-diff, deep-flatten
        flags:
        create_timestamp: Wed Mar 16 10:33:09 2022
```

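The `features` line lists several features, but my OS kernel supports only layering. The unsupported features therefore have to be disabled, and there are three ways to do this.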

Method 1: disable the unsupported features on this RBD image directly.

```
[root@ceph-01 ceph]# rbd feature disable kvm/tz exclusive-lock object-map fast-diff deep-flatten
```

Method 2: specify the required features when creating the RBD image, for example:

```
[root@ceph-01 ceph]# rbd create --size 4096 kvm/tz --image-feature layering
```

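Method 3: edit the Ceph configuration file /etc/ceph/ceph.conf and add the following line to the `[global]` section, so that new images enable only layering by default:

```
rbd_default_features = 1
```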
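`rbd_default_features` is a bitmask. Layering is 1, striping is 2, exclusive-lock is 4, object-map is 8, fast-diff is 16, deep-flatten is 32, and journaling is 64. A value of 1 therefore enables only layering. The Luminous default of 61 (1 + 4 + 8 + 16 + 32) produces exactly the feature list that `rbd info` showed above. The small decoder below has the hypothetical name `rbd_features_from_mask`:

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn an rbd_default_features bitmask into the
# comma-separated feature names that "rbd info" prints.
rbd_features_from_mask() {
    local mask="$1" out="" pair bit name
    for pair in 1:layering 2:striping 4:exclusive-lock 8:object-map \
                16:fast-diff 32:deep-flatten 64:journaling; do
        bit="${pair%%:*}"     # numeric bit value
        name="${pair#*:}"     # feature name
        # Keep the feature if its bit is set in the mask.
        if (( mask & bit )); then
            out="${out:+$out, }$name"
        fi
    done
    printf '%s\n' "$out"
}
```

For example, `rbd_features_from_mask 61` prints `layering, exclusive-lock, object-map, fast-diff, deep-flatten`, and `rbd_features_from_mask 1` prints `layering`.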

Then recreate the RBD image:

```
[root@ceph-01 ceph]# rbd rm kvm/tz    ## delete the block device
[root@ceph-01 ceph]# rbd create --size 4096 kvm/tz
[root@ceph-01 ceph]# rbd info kvm/tz  ## only layering is enabled now
rbd image 'tz':
        size 4GiB in 1024 objects
        order 22 (4MiB objects)
        block_name_prefix: rbd_data.acbf6b8b4567
        format: 2
        features: layering
        flags:
        create_timestamp: Wed Mar 16 10:55:27 2022
```

```
[root@ceph-01 openstack]# ceph auth get-or-create client.kvm mon 'allow r' osd 'allow rwx pool=kvm' > ceph.client.kvm.keyring    ## create the key for the kvm client
[root@ceph-01 openstack]# ceph auth get-or-create client.tz mon 'allow r' osd 'allow rwx pool=cinder, allow rwx pool=images, allow rwx pool=kvm' > ceph.client.tz.keyring    ## create the key used by OpenStack
```
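An OSD capability that covers several pools is a comma-separated list of `allow rwx pool=<name>` grants, as in the `client.tz` key above. The sketch below builds that string from a list of pool names; `osd_caps_for_pools` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build "allow rwx pool=a, allow rwx pool=b, ..." for the given pools.
osd_caps_for_pools() {
    local caps="" pool
    for pool in "$@"; do
        caps="${caps:+$caps, }allow rwx pool=$pool"
    done
    printf '%s\n' "$caps"
}
```

For example, the `client.tz` command above could be written as `ceph auth get-or-create client.tz mon 'allow r' osd "$(osd_caps_for_pools cinder images kvm)" > ceph.client.tz.keyring`, and `ceph auth get client.tz` shows the result. `get-or-create` does not rewrite the caps of a user that already exists; for that, `ceph auth caps` is the command to use.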

```
[root@ceph-01 ceph]# rbd map kvm/tz
/dev/rbd0                              ## the image was mapped successfully
[root@ceph-01 ceph]# rbd unmap kvm/tz  ## unmap the image
```

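Using Ceph block storage from another client:

```
[root@pxe yum.repos.d]# yum install -y ceph-common    ## install the client package
[root@ceph-01 openstack]# scp ceph.client.tz.keyring 10.1.1.14:/etc/ceph/    ## copy the key to the client
[root@ceph-01 ~]# scp /etc/ceph/ceph.conf 10.1.1.14:/etc/ceph/    ## the client also needs ceph.conf to locate the monitors
```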
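Before running `ceph -s --name client.tz` on a new client, a quick preflight check can confirm that both required files are in place. The client needs `/etc/ceph/ceph.conf` to find the monitors and `/etc/ceph/ceph.client.tz.keyring` to authenticate. The sketch assumes these default locations, and `ceph_client_ready` is a hypothetical name.

```bash
#!/usr/bin/env bash
# Hypothetical preflight: check that ceph.conf and the keyring for client.<user>
# exist and are readable in the given directory (default /etc/ceph).
ceph_client_ready() {
    local user="$1" dir="${2:-/etc/ceph}" f missing=0
    for f in "$dir/ceph.conf" "$dir/ceph.client.$user.keyring"; do
        if [ ! -r "$f" ]; then
            echo "missing or unreadable: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}
```

For example, `ceph_client_ready tz && ceph -s --name client.tz`.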

```
[root@pxe ceph]# ceph -s --name client.tz           ## access the cluster as the client.tz user
[root@pxe ceph]# rbd map kvm/tz --name client.tz    ## map the block device on the client
/dev/rbd0
[root@pxe ceph]# mkfs.xfs /dev/rbd0                 ## format the Ceph disk
[root@pxe ~]# mkdir /tmp/aa
[root@pxe ~]# mount /dev/rbd0 /tmp/aa/
```

The Ceph cluster setup is now complete.