Hikvision Camera Development with the Linux SDK

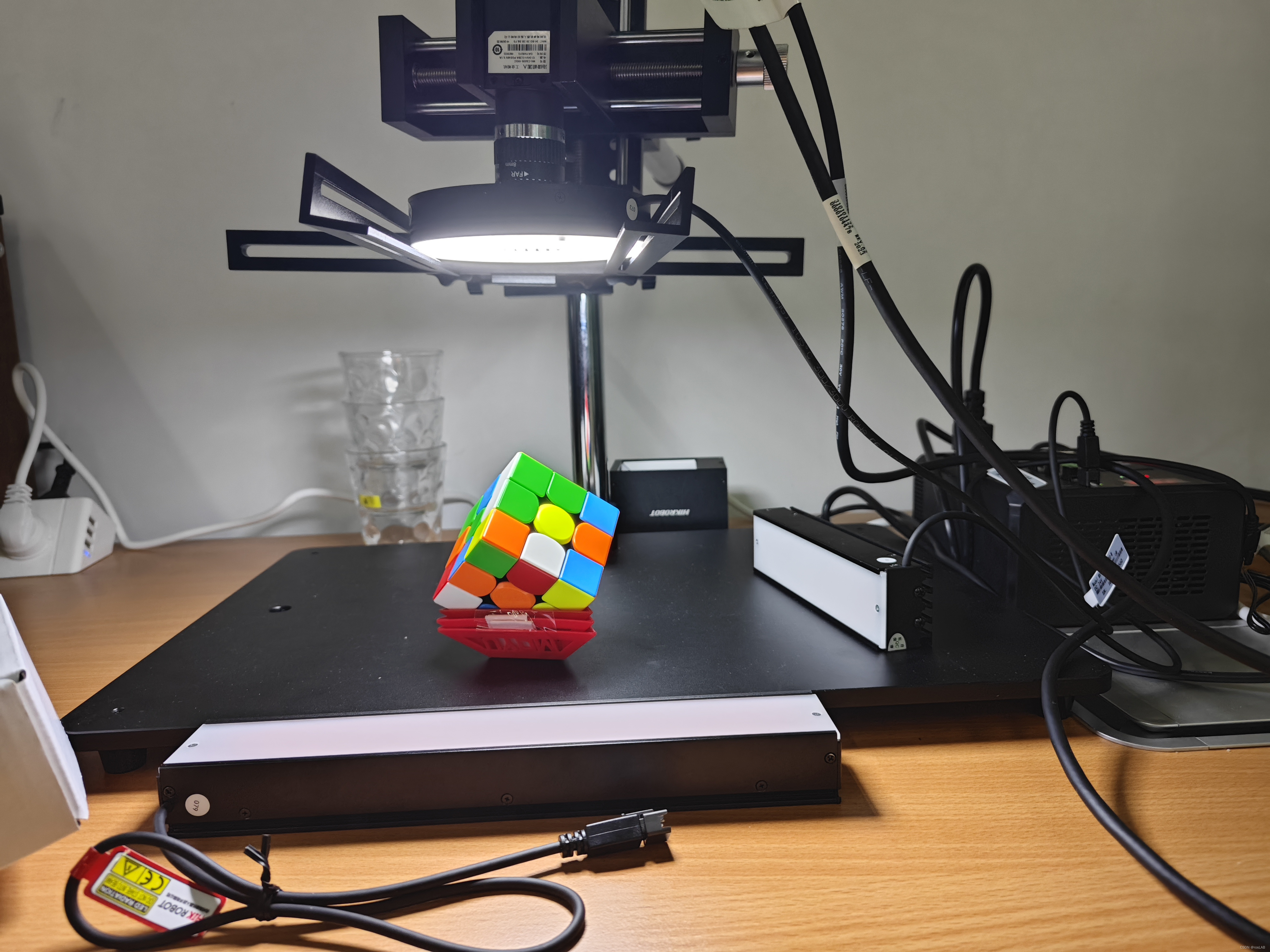

Hardware and Environment

Camera: MV-CS020-10GC

OS: Ubuntu 22.04

Language: C++

Build tool: CMake

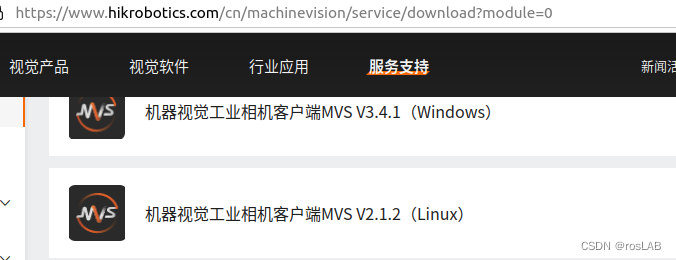

Download the SDK from the official Hikvision website

Run the following command to install it:

sudo dpkg -i MVSXXX.deb

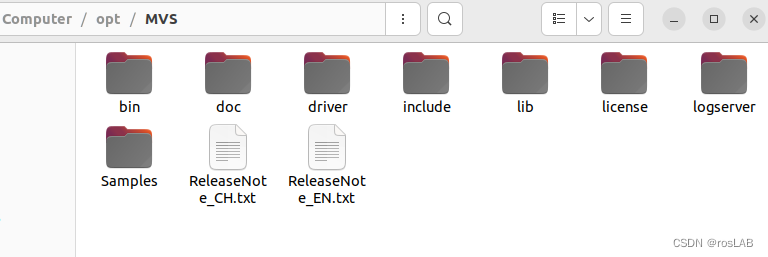

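After installation, the SDK files are under /opt/MVS. For development we only need the lib and include directories. If you are interested, you can also try the examples under Samples: running make in a sample directory produces an executable. If make reports errors, the environment variables are probably not set; run the environment-setup shell scripts in the bin folder and try again.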

make

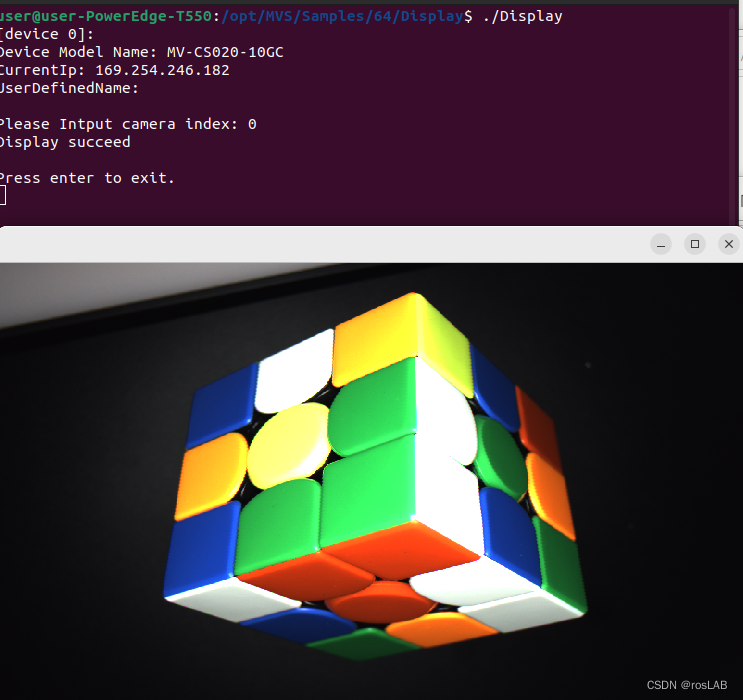

This is the output of the example under /opt/MVS/Samples/64/Display.

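Development

For development we only need the files under /opt/MVS/lib and /opt/MVS/include. These are the headers and shared libraries that Hikvision provides, so once our program links against them we can call the official Hikvision API.

I copied the Hikvision libraries into the 3rdPartys directory of my project, which makes the project easier to move to another machine.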

add_library(

cameraAPI

SHARED

)

# Define preprocessor macro for exporting symbols on Windows

if(WIN32)

target_compile_definitions(cameraAPI PRIVATE cameraAPI_EXPORTS)

endif()

message(STATUS "target name: cameraAPI")

target_include_directories(

cameraAPI PRIVATE

${OpenCV_INCLUDE_DIRS}

./include

./3rdPartys/mvsinclude

)

target_sources(

cameraAPI PRIVATE

./src/edge_camera.cpp

)

target_link_directories(

cameraAPI PUBLIC

./3rdPartys/mvslib/64

)

target_link_libraries(

cameraAPI PRIVATE

${OpenCV_LIBS}

MvCameraControl

pthread

)

Main program code

//

// Created by zc on 8/24/23.

//

#include "edge_camera.h"

EDGE_CAMERA::EDGE_CAMERA() {

std::cout<<"EDGE_CAMERA BEGIN!"<<std::endl;

}

EDGE_CAMERA::~EDGE_CAMERA(){

std::cout<<"EDGE_CAMERA FINISH!"<<std::endl;

}

// print the discovered devices' information

void EDGE_CAMERA::PrintDeviceInfo(MV_CC_DEVICE_INFO* pstMVDevInfo)

{

if (nullptr == pstMVDevInfo)

{

printf(" NULL info.\n\n");

return;

}

if (MV_GIGE_DEVICE == pstMVDevInfo->nTLayerType)

{

unsigned int nIp1 = ((pstMVDevInfo->SpecialInfo.stGigEInfo.nCurrentIp & 0xff000000) >> 24);

unsigned int nIp2 = ((pstMVDevInfo->SpecialInfo.stGigEInfo.nCurrentIp & 0x00ff0000) >> 16);

unsigned int nIp3 = ((pstMVDevInfo->SpecialInfo.stGigEInfo.nCurrentIp & 0x0000ff00) >> 8);

unsigned int nIp4 = (pstMVDevInfo->SpecialInfo.stGigEInfo.nCurrentIp & 0x000000ff);

// en:Print current ip and user defined name

printf(" IP: %u.%u.%u.%u\n" , nIp1, nIp2, nIp3, nIp4);

printf(" UserDefinedName: %s\n" , pstMVDevInfo->SpecialInfo.stGigEInfo.chUserDefinedName);

printf(" Device Model Name: %s\n\n", pstMVDevInfo->SpecialInfo.stGigEInfo.chModelName);

}

else if (MV_USB_DEVICE == pstMVDevInfo->nTLayerType)

{

printf(" UserDefinedName: %s\n", pstMVDevInfo->SpecialInfo.stUsb3VInfo.chUserDefinedName);

printf(" Device Model Name: %s\n\n", pstMVDevInfo->SpecialInfo.stUsb3VInfo.chModelName);

}

else

{

printf(" Not support.\n\n");

}

}
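
The four shifts in PrintDeviceInfo unpack a 32-bit address whose first octet sits in the most significant byte. As a minimal sketch of the same arithmetic, here is a standalone helper that returns the dotted-quad string instead of printing it. The name `formatIPv4` is my own and not part of the SDK.

```cpp
#include <cstdint>
#include <string>

// Hypothetical helper: unpack a 32-bit IPv4 address stored with the first
// octet in the most significant byte (the layout the shifts above assume).
std::string formatIPv4(std::uint32_t ip)
{
    return std::to_string((ip >> 24) & 0xffu) + "." +
           std::to_string((ip >> 16) & 0xffu) + "." +
           std::to_string((ip >> 8) & 0xffu) + "." +
           std::to_string(ip & 0xffu);
}
```

Masking after the shift keeps each part in the range 0 to 255, so the result can be logged or compared without going through printf.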

// en:Convert pixel arrangement from RGB to BGR

void EDGE_CAMERA::RGB2BGR( unsigned char* pRgbData, unsigned int nWidth, unsigned int nHeight )

{

if ( nullptr == pRgbData )

{

return;

}

// RGB TO BGR

for (unsigned int j = 0; j < nHeight; j++)

{

for (unsigned int i = 0; i < nWidth; i++)

{

unsigned char red = pRgbData[j * (nWidth * 3) + i * 3];

pRgbData[j * (nWidth * 3) + i * 3] = pRgbData[j * (nWidth * 3) + i * 3 + 2];

pRgbData[j * (nWidth * 3) + i * 3 + 2] = red;

}

}

}
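
RGB2BGR only swaps the first and third byte of every packed 3-byte pixel, so the row and column indices are not strictly needed when the rows are stored back to back. A minimal sketch of that idea, assuming a tightly packed buffer with no row padding (`swapRedBlue` is a made-up name):

```cpp
#include <cstddef>
#include <utility>
#include <vector>

// Hypothetical helper: swap the first and third byte of every packed
// 3-byte pixel in place. Assumes the rows are stored without padding.
void swapRedBlue(std::vector<unsigned char>& pixels)
{
    for (std::size_t i = 0; i + 2 < pixels.size(); i += 3) {
        std::swap(pixels[i], pixels[i + 2]);
    }
}
```

When the data is already wrapped in a cv::Mat, cv::cvtColor with cv::COLOR_RGB2BGR performs the same channel swap.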

// en:Convert data stream to Mat format then save image

bool EDGE_CAMERA::Convert2Mat(MV_FRAME_OUT_INFO_EX *pstImageInfo, unsigned char *pData, cv::Mat &img)

{

if (nullptr == pstImageInfo || nullptr == pData)

{

printf("NULL info or data.\n");

return false;

}

cv::Mat srcImage;

if ( PixelType_Gvsp_Mono8 == pstImageInfo->enPixelType ) // Mono8

{

srcImage = cv::Mat(pstImageInfo->nHeight, pstImageInfo->nWidth, CV_8UC1, pData);

}

else if ( PixelType_Gvsp_RGB8_Packed == pstImageInfo->enPixelType ) // RGB8

{

RGB2BGR(pData, pstImageInfo->nWidth, pstImageInfo->nHeight);

srcImage = cv::Mat(pstImageInfo->nHeight, pstImageInfo->nWidth, CV_8UC3, pData);

}

else if(PixelType_Gvsp_BayerRG8 == pstImageInfo->enPixelType) // BayerRG8

{

printf("PixelType_Gvsp_BayerRG8 type is converted to Mat\n");

//RGB2BGR(pData, pstImageInfo->nWidth, pstImageInfo->nHeight);

srcImage = cv::Mat(pstImageInfo->nHeight, pstImageInfo->nWidth, CV_8UC1, pData);

// srcImage.create(srcImage.rows,srcImage.cols,CV_8UC3);

cvtColor(srcImage, srcImage, cv::COLOR_BayerRG2RGB);

}

else

{

printf("Unsupported pixel format\n");

return false;

}

if ( nullptr == srcImage.data )

{

printf("Create Mat failed.\n");

return false;

}

try

{

// en:Save converted image in a local file

img = srcImage;

cv::imwrite("Image_Mat.bmp", srcImage);

}

catch (cv::Exception& ex)

{

fprintf(stderr, "Exception in saving mat image: %s\n", ex.what());

}

srcImage.release();

return true;

}
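
One thing to keep in mind with Convert2Mat: a cv::Mat constructed from an existing pointer wraps that memory without copying it, so in the Mono8 and RGB8 branches img still points into pData, and the next grab into the same buffer overwrites the pixels. If a frame has to outlive the buffer, it needs its own copy (with OpenCV, clone() does this). The sketch below shows the same idea in plain C++; `snapshotFrame` is a made-up helper, not an SDK or OpenCV function.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper: copy the bytes of one frame out of a buffer that
// will be reused for the next grab. Returns an empty vector for null input.
std::vector<unsigned char> snapshotFrame(const unsigned char* data, std::size_t size)
{
    if (data == nullptr) {
        return {};
    }
    return std::vector<unsigned char>(data, data + size);
}
```

The copy costs one allocation per frame, which is usually acceptable when only selected frames are kept.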

// en:Convert data stream in Ipl format then save image

bool EDGE_CAMERA::Convert2Ipl(MV_FRAME_OUT_INFO_EX* pstImageInfo, unsigned char * pData)

{

if (nullptr == pstImageInfo || nullptr == pData)

{

printf("NULL info or data.\n");

return false;

}

IplImage* srcImage = nullptr;

if ( PixelType_Gvsp_Mono8 == pstImageInfo->enPixelType ) // Mono8

{

srcImage = cvCreateImage(cvSize(pstImageInfo->nWidth, pstImageInfo->nHeight), IPL_DEPTH_8U, 1);

}

else if ( PixelType_Gvsp_RGB8_Packed == pstImageInfo->enPixelType ) // RGB8

{

RGB2BGR(pData, pstImageInfo->nWidth, pstImageInfo->nHeight);

srcImage = cvCreateImage(cvSize(pstImageInfo->nWidth, pstImageInfo->nHeight), IPL_DEPTH_8U, 3);

}

else

{

printf("Unsupported pixel format\n");

return false;

}

if ( nullptr == srcImage )

{

printf("Create IplImage failed.\n");

return false;

}

srcImage->imageData = (char *)pData;

try

{

// en:Save converted image in a local file

cv::Mat cConvertImage = cv::cvarrToMat(srcImage);

cv::imwrite("Image_Ipl.bmp", cConvertImage);

cConvertImage.release();

}

catch (cv::Exception& ex)

{

fprintf(stderr, "Exception in saving IplImage: %s\n", ex.what());

}

cvReleaseImage(&srcImage);

return true;

}

void EDGE_CAMERA::findCameras(){

int nRet = MV_OK;

//void* handle = nullptr;

//unsigned char * pData = nullptr;

//MV_CC_DEVICE_INFO_LIST _stDeviceList;

memset(&_stDeviceList, 0, sizeof(MV_CC_DEVICE_INFO_LIST));

// en:Enum device

do {

nRet = MV_CC_EnumDevices(MV_GIGE_DEVICE | MV_USB_DEVICE, &_stDeviceList);

if (MV_OK != nRet) {

printf("Enum Devices fail! nRet [0x%x]\n", nRet);

break;

}

// en:Show devices

if (_stDeviceList.nDeviceNum > 0) {

for (unsigned int i = 0; i < _stDeviceList.nDeviceNum; i++) {

printf("[device %u]:\n", i);

MV_CC_DEVICE_INFO *pDeviceInfo = _stDeviceList.pDeviceInfo[i];

if (nullptr == pDeviceInfo) {

break;

}

PrintDeviceInfo(pDeviceInfo);

}

} else {

printf("Find No Devices!\n");

break;

}

}while(false);

}
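
Nearly every SDK call in this listing is followed by the same printf that shows nRet in hexadecimal. A small helper can build that message in one place. This is only a sketch: `formatSdkError` is a name I made up, and the values in the usage note are arbitrary, not documented SDK error codes.

```cpp
#include <cstdio>
#include <string>

// Hypothetical helper: build "<what> fail! nRet [0x........]" from a status
// code. The cast to unsigned makes negative codes print as 8 hex digits.
std::string formatSdkError(const std::string& what, int nRet)
{
    char hex[16];
    std::snprintf(hex, sizeof(hex), "0x%08x", static_cast<unsigned int>(nRet));
    return what + " fail! nRet [" + hex + "]";
}
```

For example, `formatSdkError("Enum Devices", -1)` yields `Enum Devices fail! nRet [0xffffffff]`, which can then be printed or written to a log.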

void EDGE_CAMERA::connectCameras(){

for(unsigned int device = 0; device < _stDeviceList.nDeviceNum; device++){

if (!MV_CC_IsDeviceAccessible(_stDeviceList.pDeviceInfo[device], MV_ACCESS_Exclusive))

{

PrintDeviceInfo(_stDeviceList.pDeviceInfo[device]);

printf("Can't connect %u! ", _stDeviceList.pDeviceInfo[device]->nMacAddrLow);

continue;

}else{

void *handle;

PrintDeviceInfo(_stDeviceList.pDeviceInfo[device]);

printf("connect %10u!\n", _stDeviceList.pDeviceInfo[device]->nMacAddrLow);

int nRet = MV_CC_CreateHandle(&handle, _stDeviceList.pDeviceInfo[device]);

_handlesCameraInfos.push_back({handle,nRet}); //save the handle to handlesCameraInfos

if (MV_OK != nRet)

{

printf("Create Handle fail! nRet [0x%x]\n", nRet);

}

}

}

}

void EDGE_CAMERA::initCamera(void *handle, int createRetStatus, unsigned int cameraIndex) {

int nRet;

unsigned char * pData = nullptr;

do{

if (createRetStatus != MV_OK)

break;

// en:Open device

nRet = MV_CC_OpenDevice(handle);

if (MV_OK != nRet) {

printf("Open Device fail! nRet [0x%x]\n", nRet);

break;

}

// en:Detection network optimal package size(It only works for the GigE camera)

if (MV_GIGE_DEVICE == _stDeviceList.pDeviceInfo[cameraIndex]->nTLayerType) {

int nPacketSize = MV_CC_GetOptimalPacketSize(handle);

if (nPacketSize > 0) {

nRet = MV_CC_SetIntValue(handle, "GevSCPSPacketSize", nPacketSize);

if (MV_OK != nRet) {

printf("Warning: Set Packet Size fail! nRet [0x%x]!", nRet);

}

} else {

printf("Warning: Get Packet Size fail! nRet [0x%x]!", nPacketSize);

}

}

//en:Set trigger mode as off

nRet = MV_CC_SetEnumValue(handle, "TriggerMode", 0);

if (MV_OK != nRet) {

printf("Set Trigger Mode fail! nRet [0x%x]\n", nRet);

break;

}

// en:Get payload size

MVCC_INTVALUE stParam;

memset(&stParam, 0, sizeof(MVCC_INTVALUE));

nRet = MV_CC_GetIntValue(handle, "PayloadSize", &stParam);

if (MV_OK != nRet) {

printf("Get PayloadSize fail! nRet [0x%x]\n", nRet);

break;

}

unsigned int nPayloadSize = stParam.nCurValue;

// en:Init image info

MV_FRAME_OUT_INFO_EX stImageInfo = {0};

memset(&stImageInfo, 0, sizeof(MV_FRAME_OUT_INFO_EX));

pData = (unsigned char *) malloc(sizeof(unsigned char) * (nPayloadSize));

if (nullptr == pData) {

printf("Allocate memory failed.\n");

break;

}

memset(pData, 0, nPayloadSize); // sizeof(pData) is only the size of the pointer

// en:Start grab image

nRet = MV_CC_StartGrabbing(handle);

if (MV_OK != nRet) {

printf("Start Grabbing fail! nRet [0x%x]\n", nRet);

break;

}

_camerasDatas.push_back({handle,pData,nPayloadSize,stImageInfo});

// en:Get one frame from camera with timeout=1000ms

/* while (true) {

nRet = MV_CC_GetOneFrameTimeout(handle, pData, nPayloadSize, &stImageInfo, 1000);

if (MV_OK == nRet) {

printf("Get One Frame: Width[%d], Height[%d], FrameNum[%d]\n",

stImageInfo.nWidth, stImageInfo.nHeight, stImageInfo.nFrameNum);

} else {

printf("Get Frame fail! nRet [0x%x]\n", nRet);

break;

}

// en:Convert image data

bool bConvertRet = false;

cv::Mat img;

bConvertRet = Convert2Mat(&stImageInfo, pData, img);

cv::namedWindow("img", cv::WINDOW_NORMAL);

cv::resizeWindow("img", cv::Size(900, 600));

imshow("img", img);

cv::waitKey(20);

}*/

}while(false);

/* // en:Stop grab image

nRet = MV_CC_StopGrabbing(handle);

if (MV_OK != nRet)

{

printf("Stop Grabbing fail! nRet [0x%x]\n", nRet);

break;

}

// en:Close device

nRet = MV_CC_CloseDevice(handle);

if (MV_OK != nRet)

{

printf("Close Device fail! nRet [0x%x]\n", nRet);

break;

}

// en:Input the format to convert

printf("\n[0] OpenCV_Mat\n");

printf("[1] OpenCV_IplImage\n");

int nFormat = 0;*/

}
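
initCamera allocates the frame buffer with malloc, so the buffer has to be zeroed with its real byte count and freed on every exit path. One possible alternative, shown here only as a sketch, is to let a std::vector own the memory. `FrameBuffer` is a hypothetical type, not something the SDK provides.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical owner for one frame buffer. std::vector value-initializes
// every byte to 0 and releases the memory when the owner is destroyed.
struct FrameBuffer {
    std::vector<unsigned char> bytes;

    explicit FrameBuffer(std::size_t payloadSize) : bytes(payloadSize) {}

    unsigned char* data() { return bytes.data(); }
    unsigned int size() const { return static_cast<unsigned int>(bytes.size()); }
};
```

`data()` and `size()` would go where the listing passes pData and nPayloadSize. The FrameBuffer must stay alive for as long as any stored raw pointer into it is used.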

void EDGE_CAMERA::initAllCameras(){

unsigned int cameraIndex = 0;

for(auto handlesCameraInfo:_handlesCameraInfos){

initCamera(handlesCameraInfo.handle, handlesCameraInfo.createRetStatus, cameraIndex);

cameraIndex++;

}

}

void EDGE_CAMERA::disPlay() {

//initCamera(_handlesCameraInfos[0].handle, _handlesCameraInfos[0].createRetStatus, 0);

std::string winName = "img";

cv::namedWindow(winName, cv::WINDOW_NORMAL);

cv::resizeWindow(winName, cv::Size(900, 600));

while (cv::waitKey(50) != 'q') {

unsigned int cameraIndex = 0;

int nRet = MV_CC_GetOneFrameTimeout(_camerasDatas[0].handle, _camerasDatas[0].pData, _camerasDatas[0].nPayloadSize, &_camerasDatas[0].stImageInfo, 1000);

if (MV_OK == nRet) {

printf("Get One Frame: Width[%d], Height[%d], FrameNum[%d]\n",

_camerasDatas[0].stImageInfo.nWidth, _camerasDatas[0].stImageInfo.nHeight, _camerasDatas[0].stImageInfo.nFrameNum);

} else {

printf("Get Frame fail! nRet [0x%x]\n", nRet);

break;

}

// en:Convert image data

bool bConvertRet = false;

cv::Mat img;

bConvertRet = Convert2Mat(&_camerasDatas[0].stImageInfo, _camerasDatas[0].pData, img);

imshow(winName, img);

cv::waitKey(20);

}

}
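
disPlay leaves its loop on the first failed grab, so a single timeout ends the preview. A more tolerant loop could give up only after several failures in a row. The sketch below shows that policy with a generic callable standing in for the real grab call; `grabWithRetry` and its parameters are made up for illustration.

```cpp
#include <functional>

// Hypothetical helper: call grab() until maxFrames frames were grabbed or
// grab() failed maxConsecutiveFailures times in a row. grab() returns 0 on
// success. Returns the number of frames grabbed successfully.
int grabWithRetry(const std::function<int()>& grab,
                  int maxFrames, int maxConsecutiveFailures)
{
    int grabbed = 0;
    int failures = 0;
    while (grabbed < maxFrames && failures < maxConsecutiveFailures) {
        if (grab() == 0) {
            ++grabbed;
            failures = 0;  // a good frame resets the failure streak
        } else {
            ++failures;
        }
    }
    return grabbed;
}
```

In the real loop the callable would wrap the frame grab plus the conversion and display of a successful frame.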

bool EDGE_CAMERA::getAndProcessImg() {

int nRet = MV_OK;

void* handle = nullptr;

unsigned char * pData = nullptr;

do

{

MV_CC_DEVICE_INFO_LIST stDeviceList;

memset(&stDeviceList, 0, sizeof(MV_CC_DEVICE_INFO_LIST));

// en:Enum device

nRet = MV_CC_EnumDevices(MV_GIGE_DEVICE | MV_USB_DEVICE, &stDeviceList);

if (MV_OK != nRet)

{

printf("Enum Devices fail! nRet [0x%x]\n", nRet);

break;

}

// en:Show devices

if (stDeviceList.nDeviceNum > 0)

{

for (unsigned int i = 0; i < stDeviceList.nDeviceNum; i++)

{

printf("[device %u]:\n", i);

MV_CC_DEVICE_INFO* pDeviceInfo = stDeviceList.pDeviceInfo[i];

if (nullptr == pDeviceInfo)

{

break;

}

PrintDeviceInfo(pDeviceInfo);

}

}

else

{

printf("Find No Devices!\n");

break;

}

// en:Select device

unsigned int nIndex = 0;

while (true)

{

printf("Please Input camera index(0-%u): ", stDeviceList.nDeviceNum - 1);

if (1 == scanf("%u", &nIndex))

{

while (getchar() != '\n')

{

;

}

if (nIndex >= 0 && nIndex < stDeviceList.nDeviceNum)

{

if (!MV_CC_IsDeviceAccessible(stDeviceList.pDeviceInfo[nIndex], MV_ACCESS_Exclusive))

{

printf("Can't connect! ");

continue;

}

break;

}

}

else

{

while (getchar() != '\n')

{

;

}

}

}

// en:Create handle

nRet = MV_CC_CreateHandle(&handle, stDeviceList.pDeviceInfo[nIndex]);

if (MV_OK != nRet)

{

printf("Create Handle fail! nRet [0x%x]\n", nRet);

break;

}

// en:Open device

nRet = MV_CC_OpenDevice(handle);

if (MV_OK != nRet)

{

printf("Open Device fail! nRet [0x%x]\n", nRet);

break;

}

// en:Detection network optimal package size(It only works for the GigE camera)

if (MV_GIGE_DEVICE == stDeviceList.pDeviceInfo[nIndex]->nTLayerType)

{

int nPacketSize = MV_CC_GetOptimalPacketSize(handle);

if (nPacketSize > 0)

{

nRet = MV_CC_SetIntValue(handle, "GevSCPSPacketSize", nPacketSize);

if (MV_OK != nRet)

{

printf("Warning: Set Packet Size fail! nRet [0x%x]!", nRet);

}

}

else

{

printf("Warning: Get Packet Size fail! nRet [0x%x]!", nPacketSize);

}

}

//en:Set trigger mode as off

nRet = MV_CC_SetEnumValue(handle, "TriggerMode", 0);

if (MV_OK != nRet)

{

printf("Set Trigger Mode fail! nRet [0x%x]\n", nRet);

break;

}

// en:Get payload size

MVCC_INTVALUE stParam;

memset(&stParam, 0, sizeof(MVCC_INTVALUE));

nRet = MV_CC_GetIntValue(handle, "PayloadSize", &stParam);

if (MV_OK != nRet)

{

printf("Get PayloadSize fail! nRet [0x%x]\n", nRet);

break;

}

unsigned int nPayloadSize = stParam.nCurValue;

// en:Init image info

MV_FRAME_OUT_INFO_EX stImageInfo = { 0 };

memset(&stImageInfo, 0, sizeof(MV_FRAME_OUT_INFO_EX));

pData = (unsigned char *)malloc(sizeof(unsigned char)* (nPayloadSize));

if (nullptr == pData)

{

printf("Allocate memory failed.\n");

break;

}

memset(pData, 0, nPayloadSize); // sizeof(pData) is only the size of the pointer

// en:Start grab image

nRet = MV_CC_StartGrabbing(handle);

if (MV_OK != nRet)

{

printf("Start Grabbing fail! nRet [0x%x]\n", nRet);

break;

}

// en:Get one frame from camera with timeout=1000ms

nRet = MV_CC_GetOneFrameTimeout(handle, pData, nPayloadSize, &stImageInfo, 1000);

if (MV_OK == nRet)

{

printf("Get One Frame: Width[%d], Height[%d], FrameNum[%d]\n",

stImageInfo.nWidth, stImageInfo.nHeight, stImageInfo.nFrameNum);

}

else

{

printf("Get Frame fail! nRet [0x%x]\n", nRet);

break;

}

// en:Stop grab image

nRet = MV_CC_StopGrabbing(handle);

if (MV_OK != nRet)

{

printf("Stop Grabbing fail! nRet [0x%x]\n", nRet);

break;

}

// en:Close device

nRet = MV_CC_CloseDevice(handle);

if (MV_OK != nRet)

{

printf("Close Device fail! nRet [0x%x]\n", nRet);

break;

}

// en:Input the format to convert

printf("\n[0] OpenCV_Mat\n");

printf("[1] OpenCV_IplImage\n");

int nFormat = 0;

while (1)

{

printf("Please Input Format to convert: ");

if (1 == scanf("%d", &nFormat))

{

if (0 == nFormat || 1 == nFormat)

{

break;

}

}

while (getchar() != '\n')

{

;

}

}

// en:Convert image data

bool bConvertRet = false;

cv::Mat img;

if (OpenCV_Mat == nFormat)

{

bConvertRet = Convert2Mat(&stImageInfo, pData, img);

}

else if (OpenCV_IplImage == nFormat)

{

bConvertRet = Convert2Ipl(&stImageInfo, pData);

}

// en:Print result

if (bConvertRet)

{

printf("OpenCV format convert finished.\n");

}

else

{

printf("OpenCV format convert failed.\n");

}

} while (0);

// en:Destroy handle

if (handle)

{

MV_CC_DestroyHandle(handle);

handle = nullptr;

}

// en:Free memory

if (pData)

{

free(pData);

pData = nullptr;

}

return true;

}
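
getAndProcessImg releases the handle by hand after the do/while block. Another option, sketched here under assumptions, is to tie the handle to a std::unique_ptr with a custom deleter so the cleanup runs on every exit path. `fakeDestroyHandle` is a stand-in I wrote for the demo; with the real SDK the deleter would be a small wrapper that calls MV_CC_DestroyHandle.

```cpp
#include <memory>

// Hypothetical stand-in for the SDK destroy call; it only counts how often
// it was invoked so the behavior can be observed.
inline int& destroyCount()
{
    static int count = 0;
    return count;
}

inline void fakeDestroyHandle(void* handle)
{
    if (handle != nullptr) {
        ++destroyCount();
    }
}

using HandlePtr = std::unique_ptr<void, void (*)(void*)>;

// Wrap a raw handle so the destroy function runs when the wrapper dies.
inline HandlePtr adoptHandle(void* raw)
{
    return HandlePtr(raw, &fakeDestroyHandle);
}
```

Because std::unique_ptr never calls the deleter for a null pointer, a handle that was never created needs no special case.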

void EDGE_CAMERA::saveImg(std::string path,unsigned int cameraIndex){

}
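
saveImg is left empty in this listing. One piece it will probably need is a distinct file name per camera and frame. The helper below is only a sketch of that part: `makeImagePath` and the `cam<index>_<frame>.bmp` naming scheme are my assumptions, not something the original project defines.

```cpp
#include <cstdio>
#include <string>

// Hypothetical helper: build "<dir>/cam<index>_<frame as 6 digits>.bmp".
// An empty dir yields just the file name.
std::string makeImagePath(const std::string& dir,
                          unsigned int cameraIndex, unsigned int frameNum)
{
    char name[64];
    std::snprintf(name, sizeof(name), "cam%u_%06u.bmp", cameraIndex, frameNum);
    if (dir.empty()) {
        return name;
    }
    return (dir.back() == '/') ? dir + name : dir + "/" + name;
}
```

The resulting string could be passed to cv::imwrite in place of the fixed "Image_Mat.bmp" name used in Convert2Mat.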

The main function

int main(int argc, char **argv){

EDGE_CAMERA camera;

camera.findCameras();

camera.connectCameras();

camera.initAllCameras();

camera.disPlay();

}

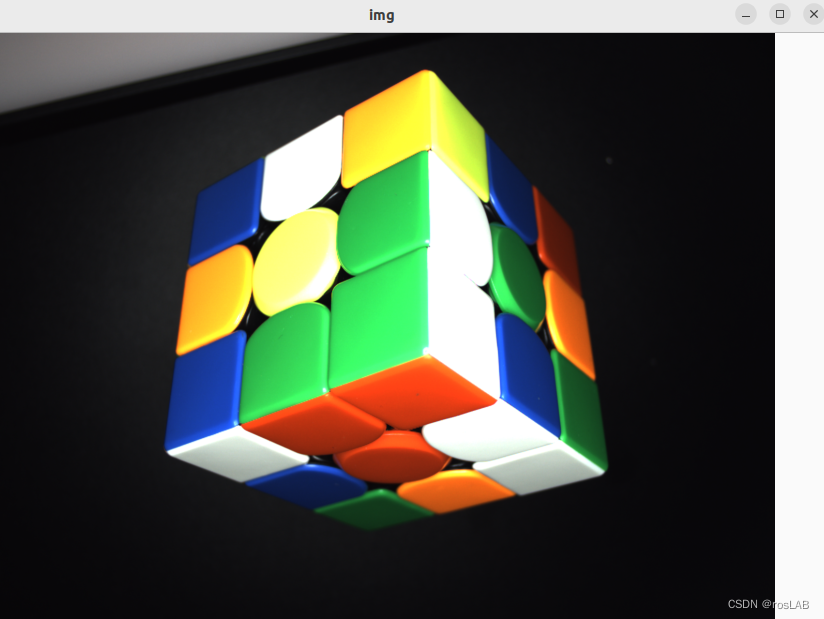

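Result

Note

I built a shared library named cameraAPI, and the main function links against cameraAPI. The code above covers only the most important parts; it is not a complete project.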